… we are told. Or perhaps not. This was the research reported last week, with varying degrees of credulity, by the BBC and The (London) Times (£paywall). The reports turned out to rest on a press release about some academic research by Kasey Buckles of the University of Notre Dame and others. You have to pay USD 5 to get the academic paper. I shall come back to that.

The paper’s abstract claims as follows.

Many papers show that aggregate fertility is pro-cyclical over the business cycle. In this paper we do something else: using data on more than 100 million births and focusing on within-year changes in fertility, we show that for recent recessions in the United States, the growth rate for conceptions begins to fall several quarters prior to economic decline. Our findings suggest that fertility behavior is more forward-looking and sensitive to changes in short-run expectations about the economy than previously thought.

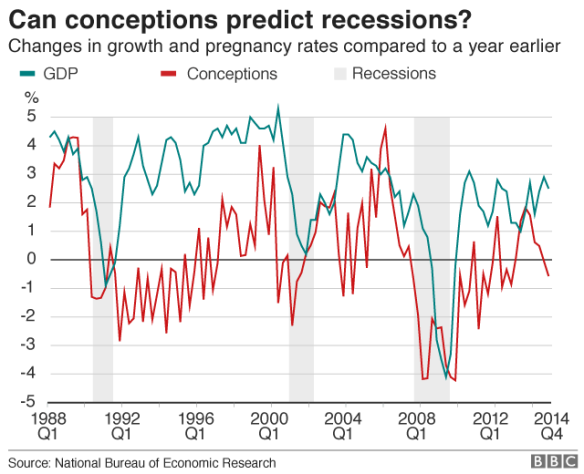

Now, here is a chart shared by the BBC.

The first thing to notice here is that we have exactly three observations. Three recession events with which to learn about any relationship between human sexual activity and macroeconomics. If you are the sort of person obsessed with “sample size”, and I know some of you are, ignore the misleading “100 million births” hold-out. Focus on the fact that n=3.

We are looking for a leading indicator, something capable of predicting a future event or outcome that we are bothered about. We need it to go up or down before the up or down event that we anticipate or fear. Further, it needs to do so consistently: in the right direction, by the right amount and in sufficient time for us to take action to correct, mitigate or exploit.

There is a similarity here to the hard and sustained thinking we have to do when we are looking for a causal relationship, though there is no claim to cause and effect here (cf. the Bradford Hill guidelines). One of the most important factors in both is temporality. A leading indicator really needs to lead, and to lead in a regular way. Making predictions like, “There will be a recession some time in the next five years,” would be a shameless attempt to re-imagine the unsurprising as a signal novelty.
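One hedged way to make “leads in a regular way” concrete is to count how often a signal is followed by the event within a fixed window, and how often it is not. The sketch below is only an illustration: the function name `score_signals`, the four-quarter window and the quarter indices are all hypothetical, invented for the example and not taken from the paper or the press coverage.

```python
def score_signals(signal_quarters, recession_starts, max_lead=4):
    """Count true and false alarms for a would-be leading indicator.

    A signal counts as a true alarm if a recession starts between 1 and
    `max_lead` quarters after it. All inputs are quarter indices.
    The window length is an assumption, not a value from the study.
    """
    def leads(signal, recession):
        return 0 < recession - signal <= max_lead

    true_alarms = [s for s in signal_quarters
                   if any(leads(s, r) for r in recession_starts)]
    caught = [r for r in recession_starts
              if any(leads(s, r) for s in signal_quarters)]
    return {
        "signals": len(signal_quarters),
        "true_alarms": len(true_alarms),
        "false_alarms": len(signal_quarters) - len(true_alarms),
        "recessions_caught": len(caught),
        "recessions_missed": len(recession_starts) - len(caught),
    }


if __name__ == "__main__":
    # Hypothetical quarter indices, purely illustrative.
    signals = [5, 12, 18, 27, 33, 41]
    recessions = [20, 44, 70]
    print(score_signals(signals, recessions))
```

An indicator that catches most events but also raises many false alarms gives little basis for action, which is why both columns of the tally matter.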

Having recognised the paucity of the data and the subtlety of identifying a usefully predictive effect, we move on to the chart. The chart above is pretty useless for the job at hand. Run charts with multiple variables are very weak tools for assessing association between factors, except in the most unambiguous cases. The chart broadly suggests some “association” between fertility and economic growth. It is possible to identify “big falls” both in fertility and growth and to persuade ourselves that the collapses in pregnancy statistics prefigure financial contraction. But the chart is not compelling evidence that one variable tracks the other reliably, even with a time lag. There appears to be no evident global relationship between the variation in the two factors. There are big swings in each for which no corresponding event stands out in the other variable.

We have to go back and learn the elementary but universal lessons of simple linear regression. Remember that I told you that simple linear regression is the prototype of all successful statistical modelling and prediction work. We have to know whether we have a system that is sufficiently stable to be predictable. We have to know whether it is worth the effort. We have to understand the uncertainties in any prediction we make.

We do not have to go far to realise that the chart above cannot give a cogent answer to any of those. The exercise would, in any event, be a challenge with three observations. I am slightly resistant to spending GBP 3.63 to see the authors’ analysis. So I will reserve my judgment as to what the authors have actually done. I will stick to commenting on data journalism standards. However, I sense that the authors don’t claim to be able to predict economic growth simpliciter, just some discrete events. Certainly looking at the chart, it is not clear which of the many falls in fertility foreshadow financial and political crisis. With the myriad of factors available to define an “event”, it should not be too difficult, retrospectively, to define some fertility “signal” in the near term of the bull market and fit it astutely to the three data points.
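To see why three observations are such a challenge for a regression-style prediction, here is a minimal sketch of a simple linear regression with a 95% prediction interval. The three data pairs are hypothetical numbers made up for illustration, not values from the paper, and the names `fit_and_predict` and `T_975_DF1` are my own. The only external fact used is the Student-t critical value for one degree of freedom, which is what is left after fitting a slope and an intercept to three points.

```python
import math

# Two-sided 95% Student-t critical value for 1 degree of freedom (n - 2 with n = 3).
T_975_DF1 = 12.706


def fit_and_predict(xs, ys, x_new, t_crit=T_975_DF1):
    """Least-squares line plus a prediction interval at x_new.

    The default t_crit is only appropriate when len(xs) == 3.
    Returns (prediction, lower bound, upper bound).
    """
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x

    # Residual standard error on n - 2 degrees of freedom.
    residuals = [y - (intercept + slope * x) for x, y in zip(xs, ys)]
    s = math.sqrt(sum(r * r for r in residuals) / (n - 2))

    # Standard error for predicting a single new observation.
    se_pred = s * math.sqrt(1 + 1 / n + (x_new - mean_x) ** 2 / sxx)
    y_hat = intercept + slope * x_new
    return y_hat, y_hat - t_crit * se_pred, y_hat + t_crit * se_pred


if __name__ == "__main__":
    # Hypothetical data: fall in conception growth (x) vs. later fall in output (y).
    xs = [-1.0, -2.0, -0.5]
    ys = [-2.0, -4.5, -0.5]
    y_hat, lower, upper = fit_and_predict(xs, ys, x_new=-1.5)
    print(f"prediction {y_hat:.2f}, 95% interval ({lower:.2f}, {upper:.2f})")
```

The invented points lie almost exactly on a line, yet the interval is still several units wide, because with one degree of freedom the t multiplier is enormous. A near-perfect fit to three points buys very little predictive certainty.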

As The Times, but not the BBC, reported:

However … the correlation between conception and recession is far from perfect. The study identified several periods when conceptions fell but the economy did not.

“It might be difficult in practice to determine whether a one-quarter drop in conceptions is really signalling a future downturn. However, this is also an issue with many commonly used economic indicators,” Professor Buckles told the Financial Times.

Think of it this way. There are, at most, three independent data points on your scatter plot. Really. And even then the “correlation … is far from perfect”.

And you have had the opportunity to optimise the time lag to maximise the “correlation”.
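The effect of that freedom can be sketched with a small simulation. Below, the “indicator” is pure noise with no connection at all to the severity of three “events”, yet we are allowed to pick whichever lag gives the largest absolute correlation. Everything here is an assumption made for illustration: the event times, the range of lags, the Gaussian noise and the function names are mine, not the authors’ method.

```python
import random
import statistics


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    mean_x, mean_y = statistics.fmean(xs), statistics.fmean(ys)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    return sxy / (sxx * syy) ** 0.5


def best_lag_correlation(rng, event_times=(30, 60, 90), max_lag=8):
    """Largest |correlation| over lags 1..max_lag between unrelated series."""
    indicator = [rng.gauss(0.0, 1.0) for _ in range(100)]  # pure noise
    severity = [rng.gauss(0.0, 1.0) for _ in event_times]  # independent of it
    return max(
        abs(pearson([indicator[t - lag] for t in event_times], severity))
        for lag in range(1, max_lag + 1)
    )


def median_best_correlation(max_lag, trials=2000, seed=1):
    """Median, over many simulated histories, of the best-lag |correlation|."""
    rng = random.Random(seed)
    return statistics.median(
        best_lag_correlation(rng, max_lag=max_lag) for _ in range(trials)
    )


if __name__ == "__main__":
    print("one fixed lag:    ", round(median_best_correlation(1), 3))
    print("best of 8 lags:   ", round(median_best_correlation(8), 3))
```

With three points and a single fixed lag, the median absolute correlation between unrelated series should already come out around 0.7. Once the lag can be chosen after the fact, it should climb well above 0.9. An impressive-looking correlation on three events is therefore close to the default outcome, not evidence of anything.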

This is all probably what we suspected. What we really want is to see the authors put their money where their mouth is by wagering on the next recession, a point well made in Nassim Taleb’s new book Skin in the Game. What distinguishes a useful prediction is that the holder can use it to get the better of the crowd, and thinks the risks worth it.

As for the criticisms of economic forecasting generally, we get it. I would have thought though that the objective was to improve forecasting, not to satirise it.
