Interesting news here that psychology journal Basic and Applied Social Psychology (BASP) has banned the use of p-values in the academic research papers that it will publish in the future.

The dangers of p-values are widely known, though their use seems to persist in any number of disciplines, from the Higgs boson to climate change.

There has been some wonderful recent advocacy deprecating p-values, from Deirdre McCloskey and Regina Nuzzo among others. BASP editor David Trafimow has indicated that the journal will not now publish formal hypothesis tests (of the Neyman-Pearson type) or confidence intervals purporting to support experimental results. I presume that appeals to “statistical significance” are proscribed too. Trafimow has no dogma as to what people should do instead but is keen to encourage descriptive statistics. That is good news.

However, Trafimow does say something that worries me.

… as the sample size increases, descriptive statistics become increasingly stable and sampling error is less of a problem.

It is trite statistics that merely increasing sample size, in the sense of the raw number of observations, is no guarantee of reducing sampling error. If the sample is not rich enough to capture all the relevant sources of variation, then data is amassed in vain. A common example is the inter-laboratory study of analytical techniques. A researcher who takes 10 observations from Laboratory A and 10 from Laboratory B really has only two observations, at least as far as the important and dominant sources of variation are concerned. Increasing the number of observations to 100 from each laboratory would simply be a waste of resources.
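The laboratory point can be checked with a small simulation. This is a minimal sketch, assuming a simple two-level model in which each laboratory contributes a fixed bias drawn from a normal distribution, plus independent within-laboratory noise. The standard deviations (5.0 between laboratories, 1.0 within) and the function names are hypothetical, chosen only to make the lab-to-lab effect dominant; they are not taken from any real study.

```python
import math
import random
import statistics


def analytic_sd_of_grand_mean(n_per_lab, n_labs=2, between_sd=5.0, within_sd=1.0):
    """Spread of the grand mean under the assumed model:
    observation = lab_bias + within_lab_noise, lab_bias ~ N(0, between_sd^2)."""
    return math.sqrt(between_sd**2 / n_labs + within_sd**2 / (n_labs * n_per_lab))


def naive_standard_error(n_per_lab, n_labs=2, between_sd=5.0, within_sd=1.0):
    """The 'textbook' standard error that wrongly treats every observation as independent."""
    total_sd = math.sqrt(between_sd**2 + within_sd**2)
    return total_sd / math.sqrt(n_labs * n_per_lab)


def simulated_sd_of_grand_mean(n_per_lab, n_labs=2, between_sd=5.0, within_sd=1.0,
                               trials=2000, seed=1):
    """Monte Carlo check: rerun the hypothetical study many times, each time with
    freshly drawn laboratories, and measure how much the grand mean moves."""
    rng = random.Random(seed)
    grand_means = []
    for _ in range(trials):
        obs = []
        for _ in range(n_labs):
            lab_bias = rng.gauss(0.0, between_sd)  # dominant lab-to-lab effect
            obs.extend(lab_bias + rng.gauss(0.0, within_sd) for _ in range(n_per_lab))
        grand_means.append(statistics.fmean(obs))
    return statistics.stdev(grand_means)


for n in (10, 100):
    print(n, round(naive_standard_error(n), 3),
          round(analytic_sd_of_grand_mean(n), 3),
          round(simulated_sd_of_grand_mean(n), 3))
```

Going from 10 to 100 observations per laboratory, the naive standard error falls from about 1.14 to about 0.36, while the actual spread of the grand mean barely moves (about 3.54 in both cases). Under this model it can never fall below `between_sd / sqrt(n_labs)`, however many observations are taken inside each laboratory. Only sampling more laboratories attacks the dominant source of variation.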

But that is not all there is to it. Sampling error only addresses how well we have represented the sampling frame. In any reasonably interesting statistical problem, and certainly in any attempt to manage risk, we are only interested in the future. The critical question before we can engage in any statistical inference, even tentative inference, is “Is the data representative of the future?”. That requires that the data has the statistical property of exchangeability. Some people prefer the more management-oriented term “stable and predictable”. That’s why I wish Trafimow hadn’t used the word “stable”.

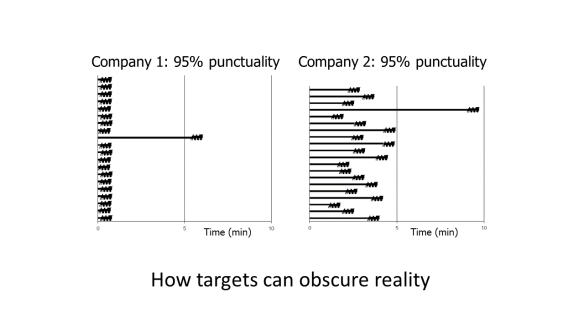

Assessment of stability and predictability is fundamental to any prediction or data-based management. It demands confident use of process-behaviour charts and trenchant scrutiny of the sources of variation that drive the data. It is the necessary starting point of all reliable inference. A taste for p-values is a major impediment to clear thinking on the matter. They do not help. It would be encouraging to believe that scepticism was on the march, but I don’t think prohibition is the best means of education.
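For readers who have not met a process-behaviour chart, here is a minimal sketch of the simplest one, the individuals (XmR) chart. It computes natural process limits as the mean plus or minus 2.66 times the average moving range, then flags points beyond those limits. The function names and the data are hypothetical and purely illustrative, and only the first detection rule (a point outside the limits) is implemented; in practice one would also look at runs and trends, and at the context of each signal. A chart without signals is evidence of a stable and predictable process, not proof of it.

```python
import statistics


def xmr_limits(baseline):
    """Centre line and natural process limits for an individuals (XmR) chart."""
    if len(baseline) < 2:
        raise ValueError("need at least two baseline observations")
    centre = statistics.fmean(baseline)
    # Moving ranges: absolute differences between successive observations.
    moving_ranges = [abs(b - a) for a, b in zip(baseline, baseline[1:])]
    mr_bar = statistics.fmean(moving_ranges)
    # 2.66 = 3 / 1.128, where 1.128 is the bias-correction constant d2 for ranges of two.
    return centre, centre - 2.66 * mr_bar, centre + 2.66 * mr_bar


def points_outside(values, lower, upper):
    """Indices of points beyond the natural process limits (detection rule 1 only)."""
    return [i for i, x in enumerate(values) if x < lower or x > upper]


baseline = [10.2, 9.8, 10.1, 10.0, 9.9, 10.3, 9.7, 10.0, 10.1, 9.9]
centre, lower, upper = xmr_limits(baseline)
new_readings = [10.1, 9.9, 11.2, 10.0]
print(round(centre, 3), round(lower, 3), round(upper, 3))
print(points_outside(new_readings, lower, upper))
```

Here the baseline gives limits of roughly 9.261 to 10.739, and the reading of 11.2 (index 2) is flagged. That signal is an invitation to investigate the assignable cause, not a probability statement; until it is understood, the baseline cannot be presumed representative of the future.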